Generative AI Engineer: Interview Questions and How to Prepare for the Interview

Introduction

Generative AI is transforming various fields, from image synthesis to natural language processing. As a Generative AI Engineer, you’ll need to demonstrate both a deep understanding of generative models and the ability to solve complex problems. In this blog, we’ll explore key interview questions for generative AI engineering roles and provide tips on how to prepare for them effectively.

1. Understanding Generative Models

Q: What are generative models, and how do they differ from discriminative models?

Answer:

Generative models learn the distribution of the data itself, p(x) or the joint p(x, y), and can therefore generate new data samples (some, like GANs, learn it only implicitly as a sampler). Discriminative models learn only the conditional p(y | x), or a decision boundary directly, and focus on classifying data or predicting outcomes from input features. Generative models include Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), whereas discriminative models include logistic regression and support vector machines.
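To make the distinction concrete, here is a minimal NumPy sketch (an illustrative example; the function names are hypothetical, not from any library) of a simple generative classifier. It fits a class prior p(y) and a diagonal Gaussian p(x | y) per class, so it can both classify points with Bayes' rule and sample brand-new points. A discriminative model such as logistic regression fits p(y | x) directly and has no equivalent of `sample`.

```python
import numpy as np


def fit_gaussian_generative(X, y):
    """Estimate p(y) and a diagonal Gaussian p(x | y) for each class (Gaussian naive Bayes)."""
    params = {}
    for c in np.unique(y):
        Xc = X[y == c]
        prior = len(Xc) / len(X)
        params[c] = (prior, Xc.mean(axis=0), Xc.std(axis=0) + 1e-6)  # small floor avoids zero std
    return params


def sample(params, c, n, rng):
    """Generate n new feature vectors for class c by drawing from p(x | y = c)."""
    _, mu, sigma = params[c]
    return rng.normal(mu, sigma, size=(n, len(mu)))


def predict(params, X):
    """Classify with Bayes' rule: argmax over c of log p(y = c) + log p(x | y = c)."""
    classes = sorted(params)
    scores = []
    for c in classes:
        prior, mu, sigma = params[c]
        log_lik = -0.5 * np.sum(((X - mu) / sigma) ** 2 + 2 * np.log(sigma) + np.log(2 * np.pi), axis=1)
        scores.append(np.log(prior) + log_lik)
    return np.array(classes)[np.argmax(scores, axis=0)]


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (100, 2)), rng.normal(5.0, 1.0, (100, 2))])
y = np.array([0] * 100 + [1] * 100)

params = fit_gaussian_generative(X, y)
new_points = sample(params, 1, 5, rng)  # five synthetic points for class 1
print(predict(params, new_points))
```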

Preparation Tips:

- Study: Review the theoretical foundations of both generative and discriminative models.

- Resources: Use online courses, textbooks, and research papers to deepen your understanding.

2. Architecture and Working of GANs

Q: Can you explain how a Generative Adversarial Network (GAN) works?

Answer:

A GAN consists of two neural networks: the Generator and the Discriminator. The Generator creates fake data samples from random noise, while the Discriminator evaluates whether the data is real or fake. The Generator and Discriminator are trained adversarially, with the Generator aiming to produce realistic data and the Discriminator trying to distinguish between real and fake data.
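The adversarial objective can be written down using only the discriminator's output probabilities. The NumPy sketch below (function names are illustrative, not from a specific framework) computes the discriminator's binary cross-entropy loss and the generator's "non-saturating" loss, the variant the original GAN paper suggests in practice because it gives stronger gradients early in training. In PyTorch or TensorFlow you would compute the same quantities with the framework's binary cross-entropy loss and backpropagate through both networks.

```python
import numpy as np


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: push D(x) toward 1 on real data and D(G(z)) toward 0 on fakes."""
    return -np.mean(np.log(d_real + eps) + np.log(1.0 - d_fake + eps))


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: maximize log D(G(z)) rather than minimize log(1 - D(G(z)))."""
    return -np.mean(np.log(d_fake + eps))


# d_real / d_fake: the discriminator's probability that each sample is real.
d_real = np.array([0.9, 0.8])  # outputs on real samples
d_fake = np.array([0.1, 0.2])  # outputs on generated samples
print(discriminator_loss(d_real, d_fake))  # small: D currently separates real from fake well
print(generator_loss(d_fake))              # large: G is not yet fooling D
```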

Preparation Tips:

- Hands-On Practice: Implement GANs using frameworks like TensorFlow or PyTorch.

- Conceptual Understanding: Focus on the adversarial training process and the balance between the Generator and Discriminator.

3. Evaluating Generative Models

Q: What metrics would you use to evaluate the quality of generated text, images, or other outputs?

Answer:

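For text, BLEU and ROUGE measure n-gram overlap with reference texts, while perplexity measures how well a language model predicts held-out text (lower is better). For images, Inception Score (IS, higher is better) and Fréchet Inception Distance (FID, lower is better) are standard. Tailor the evaluation metrics to the specific type of generative model and output you’re working with.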
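Perplexity is the easiest of these to compute by hand: it is the exponential of the average negative log-likelihood the model assigns to each token of held-out text. A minimal sketch, assuming you already have natural-log probabilities for each token from your model:

```python
import math


def perplexity(token_log_probs):
    """exp(mean negative log-likelihood) over tokens; inputs are natural-log probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


# A model that spreads probability uniformly over 4 candidate tokens has perplexity 4.
print(perplexity([math.log(0.25)] * 3))
```

Intuitively, a perplexity of k means the model is as uncertain as if it were choosing uniformly among k tokens at each step.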

Preparation Tips:

- Research: Familiarize yourself with common metrics and their applications.

- Experiment: Evaluate models using different metrics to understand what each one captures about output quality.

4. Handling Overfitting and Underfitting

Q: How do you handle overfitting and underfitting in generative AI models?

Answer:

For overfitting, techniques like dropout, regularization, and early stopping can be employed. For underfitting, increase model complexity, provide more informative features, or train for longer. A sufficiently large and diverse dataset is crucial, especially for preventing overfitting.
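Early stopping is simple enough to sketch without a framework. The class below (names are illustrative) watches validation loss and signals a stop once it has failed to improve for `patience` epochs; Keras ships a comparable built-in callback, `tf.keras.callbacks.EarlyStopping`.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved by more than min_delta for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss  # new best: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=2)
for epoch, val_loss in enumerate([1.0, 0.8, 0.7, 0.72, 0.71, 0.69]):
    if stopper.step(val_loss):
        print(f"Stopping after epoch {epoch}; best validation loss {stopper.best}")
        break
```

In practice you would also save the model weights whenever `best` improves, so the best checkpoint can be restored after stopping.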

Preparation Tips:

- Experiment: Test different regularization techniques and model architectures.

- Case Studies: Review real-world cases where these issues were addressed successfully.

5. Working with Large-Scale Datasets

Q: How do you handle large-scale datasets for training generative models?

Answer:

Utilize distributed training, data sharding, and efficient data loading techniques. Leveraging scalable storage solutions and parallel processing can also help manage large datasets effectively.
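Data sharding can be illustrated in a few lines. In this plain-Python sketch, `rank` and `world_size` are assumed to come from your distributed launcher (for example `torch.distributed.get_rank()`), and record i is assigned to worker i % world_size so that shards are disjoint and together cover the whole dataset.

```python
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def shard(records: Iterable[T], rank: int, world_size: int) -> Iterator[T]:
    """Yield only this worker's share: record i goes to worker i % world_size."""
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    for i, record in enumerate(records):
        if i % world_size == rank:
            yield record


# Three workers splitting ten records.
for rank in range(3):
    print(rank, list(shard(range(10), rank, world_size=3)))
```

This strided assignment mirrors what PyTorch's `DistributedSampler` does, although that class also shuffles and pads. Because every worker here still iterates over the full stream, at real scale you would usually shard at the file level so each worker opens only its own files.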

Preparation Tips:

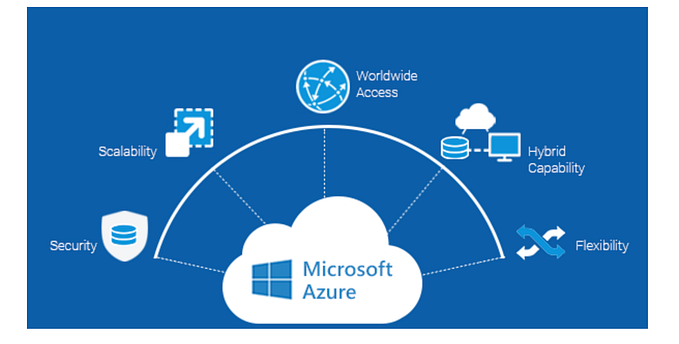

- Practice: Work with large datasets in a cloud environment.

- Tools: Familiarize yourself with tools and frameworks that support distributed training.

6. Frameworks and Libraries

Q: What frameworks or libraries have you used for developing generative models? What are the advantages and disadvantages of each?

Answer:

Common frameworks include TensorFlow, PyTorch, and Hugging Face Transformers. TensorFlow is robust with extensive documentation, while PyTorch offers flexibility and ease of use. Hugging Face Transformers provide pre-trained models and APIs but are limited to available models.

Preparation Tips:

- Experience: Gain hands-on experience with different frameworks.

- Comparison: Understand the strengths and limitations of each framework.

7. Model Optimization

Q: How would you optimize the performance of a generative model for a specific task, like image generation or text generation?

Answer:

Optimize by tuning hyperparameters, adjusting model architecture, and using techniques specific to the task, such as data augmentation for images or fine-tuning pre-trained models for text.
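Hyperparameter tuning can start as simple random search. In this plain-Python sketch, `toy_objective` is a hypothetical stand-in for "train the model with these settings and return the validation loss", and the search space is an assumed example; dedicated libraries such as Optuna or KerasTuner add smarter samplers, pruning, and parallelism on top of the same idea.

```python
import math
import random


def random_search(objective, space, n_trials=20, seed=0):
    """Draw hyperparameters at random from `space` and keep the configuration with the lowest objective."""
    rng = random.Random(seed)
    best_params, best_score = None, math.inf
    for _ in range(n_trials):
        params = {name: draw(rng) for name, draw in space.items()}
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Each entry is a function that draws one value for that hyperparameter.
space = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-5, -2),  # log-uniform in [1e-5, 1e-2]
    "batch_size": lambda rng: rng.choice([16, 32, 64, 128]),
}


def toy_objective(params):
    """Hypothetical validation loss: best near learning_rate = 10**-3.5 and small batches."""
    return (math.log10(params["learning_rate"]) + 3.5) ** 2 + params["batch_size"] / 1000


best, score = random_search(toy_objective, space, n_trials=50)
print(best, score)
```

Sampling the learning rate on a log scale matters: a uniform draw over [1e-5, 1e-2] would almost never try values below 1e-3.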

Preparation Tips:

- Case Studies: Analyze successful optimization strategies for similar tasks.

- Tools: Use hyperparameter tuning tools and libraries.

8. Ensuring Responsible AI Use

Q: How do you ensure that the generative models you develop are used responsibly and do not perpetuate bias or misinformation?

Answer:

Implement bias detection and mitigation strategies, follow ethical guidelines, ensure transparency, and involve human oversight to address potential misuse or unintended consequences.
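As one concrete bias check, you can compare how often a model produces a positive outcome for different groups (demographic parity). The plain-Python sketch below uses made-up data; a gap near 0 means similar rates. Demographic parity is only one of several fairness definitions, and libraries such as Fairlearn provide tested implementations of this and other metrics.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups, plus the per-group rates."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


preds = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_difference(preds, groups)
print(rates, gap)  # group "a" receives positive predictions far more often than group "b"
```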

Preparation Tips:

- Ethics: Study ethical considerations in AI development.

- Practices: Learn about best practices for bias detection and mitigation.

9. Staying Updated

Q: How do you keep up with the latest developments in the field of generative AI?

Answer:

Stay updated by reading research papers, following industry blogs, attending conferences, participating in online communities, and engaging with professional networks.

Preparation Tips:

- Subscriptions: Subscribe to relevant journals and newsletters.

- Networking: Connect with experts in the field through conferences and forums.

10. Troubleshooting Complex Issues

Q: Describe a time when you had to troubleshoot a complex issue in a machine learning model. What was the problem, and how did you resolve it?

Answer:

Provide specific details about the problem, your investigative process, and the solution. Highlight your problem-solving skills and ability to diagnose and address issues effectively.

Preparation Tips:

- Documentation: Keep detailed records of past projects and challenges.

- Reflection: Reflect on your problem-solving experiences and prepare to discuss them.

Q1. Explain the architecture of large language models (LLMs) like GPT-3.

Large language models like GPT-3 are based on the Transformer architecture. The original Transformer has an encoder-decoder structure; GPT-3 keeps only the decoder stack (a decoder-only model) and is trained to predict the next token. Here’s a brief breakdown:

- Attention Mechanism: Masked (causal) self-attention weighs how relevant each earlier token is to the current one, letting the model capture context and relationships without looking at future tokens.

- Layers: The largest GPT-3 model (175 billion parameters) stacks 96 Transformer decoder layers, each consisting of multi-head self-attention and a feed-forward network, with residual connections and layer normalization around them.

- Feed-forward Networks: Fully connected layers applied to each token position independently, transforming the output of the attention sub-layer.

- Positional Encoding: Adds position information to the token embeddings so the model can account for word order. GPT-3, like GPT-2, uses learned position embeddings rather than the fixed sinusoidal encodings of the original Transformer.

- Output: A final projection and softmax produce a probability distribution over the vocabulary for the next token.
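The core of each decoder layer is masked self-attention. This NumPy sketch shows a single attention head with a causal mask; the weight matrices are random placeholders, and a real GPT block adds multiple heads, an output projection, residual connections, and layer normalization.

```python
import numpy as np


def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head masked (causal) scaled dot-product self-attention.

    x: (seq_len, d_model); w_q, w_k, w_v: (d_model, d_head).
    Position t may only attend to positions <= t, as in a decoder-only model.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])                 # (seq_len, seq_len) similarity scores
    mask = np.triu(np.ones_like(scores, dtype=bool), k=1)   # True above the diagonal = future tokens
    scores = np.where(mask, -np.inf, scores)                # block attention to the future
    scores -= scores.max(axis=-1, keepdims=True)            # numerical stability before exp
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)          # softmax over each row
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_head = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
out, weights = causal_self_attention(x, w_q, w_k, w_v)
print(out.shape)              # (4, 8)
print(np.round(weights, 2))   # lower-triangular; each row sums to 1
```

Because the first token can only attend to itself, its output is exactly its own value vector; later tokens mix information from everything before them.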

Q2. How do you evaluate the quality of a foundation model?

Evaluating the quality of a foundation model involves several aspects:

- Performance Metrics: For language models, metrics like perplexity, accuracy, BLEU score (for translation), and F1 score (for classification tasks) are used.

- Benchmarking: Comparing the model’s performance against standard benchmarks or datasets (e.g., GLUE, SQuAD).

- Human Evaluation: Assessing the quality of generated outputs through human judgment to ensure relevance, coherence, and usefulness.
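Benchmarks such as SQuAD score generated answers with token-overlap F1. The sketch below is a simplified version: it only lowercases and splits on whitespace, whereas the official SQuAD evaluation script also strips punctuation and articles.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a predicted answer and a reference answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    common = Counter(pred_tokens) & Counter(ref_tokens)  # multiset intersection
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(token_f1("the Eiffel Tower", "Eiffel Tower in Paris"))  # precision 2/3, recall 2/4
```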

Q3. What metrics would you use to evaluate the quality of generated text, images, or other outputs?

- Text: BLEU, ROUGE, METEOR, perplexity, human judgment (for coherence, relevance, and readability).

- Images: Inception Score (IS), Fréchet Inception Distance (FID), human judgment (for realism and quality).

- Other Outputs: Custom metrics based on the specific domain, such as accuracy for classification tasks or diversity metrics for generative tasks.
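For the diversity metrics mentioned above, one common choice for text is distinct-n: the ratio of unique n-grams to total n-grams across a set of generated outputs. A minimal sketch, assuming whitespace tokenization (real evaluations usually reuse a standard tokenizer):

```python
def distinct_n(texts, n=2):
    """Fraction of n-grams across all generated texts that are unique (higher = more diverse)."""
    ngrams = []
    for text in texts:
        tokens = text.lower().split()
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


print(distinct_n(["the cat sat", "the cat sat"]))  # repeated outputs: low diversity
print(distinct_n(["the cat sat", "a dog ran"]))    # all bigrams unique
```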

Q4. How do you handle overfitting and underfitting in generative AI models?

- Overfitting: Use techniques like dropout, regularization, data augmentation, and early stopping. Ensuring diverse and sufficient training data also helps.

- Underfitting: Increase model complexity, provide more features, or train for longer. Improving data quality and preprocessing can also help.
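Dropout, listed above, is short to implement. This NumPy sketch shows the "inverted" variant that frameworks such as PyTorch (`torch.nn.Dropout`) use: surviving activations are scaled up during training, so nothing needs rescaling at inference time.

```python
import numpy as np


def dropout(x, p, training, rng):
    """Inverted dropout: in training, zero each unit with probability p and scale survivors by 1 / (1 - p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return x  # at inference time dropout is the identity
    keep = rng.random(x.shape) >= p  # each unit survives with probability 1 - p
    return x * keep / (1.0 - p)


rng = np.random.default_rng(0)
x = np.ones(8)
print(dropout(x, p=0.5, training=True, rng=rng))   # each entry is either 0.0 or 2.0
print(dropout(x, p=0.5, training=False, rng=rng))  # unchanged
```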

Q5. How do you handle large-scale datasets for training generative models?

- Distributed Training: Use distributed computing resources and parallel processing.

- Data Sharding: Divide the dataset into manageable chunks.

- Efficient Data Loading: Implement efficient data loading and preprocessing pipelines.

- Storage Solutions: Utilize scalable storage solutions like cloud storage.
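Efficient loading mostly means never materializing the whole dataset in memory. In this plain-Python sketch, records are streamed lazily (here from a hypothetical `read_records` generator over a text file) and grouped into batches on the fly. Python 3.12 adds `itertools.batched`, which does the same but yields tuples; production pipelines typically use `tf.data` or PyTorch's `DataLoader` to add parallel prefetching on top.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(records: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a lazily produced stream of records into lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    it = iter(records)
    while batch := list(islice(it, batch_size)):
        yield batch


def read_records(path: str) -> Iterator[str]:
    """Hypothetical reader: yields one record per line without loading the whole file."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            yield line.rstrip("\n")


# With a real file you might write: for batch in batched(read_records("train.txt"), 256): ...
print(list(batched(range(7), 3)))  # [[0, 1, 2], [3, 4, 5], [6]]
```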

Q6. What frameworks or libraries have you used for developing generative models? What are the advantages and disadvantages of each?

- TensorFlow: Widely used, supports various models, good documentation. Can be heavy and complex.

- PyTorch: Flexible and user-friendly, strong support for dynamic computation graphs. Historically had less mature deployment tooling (e.g., for serving and mobile) than TensorFlow.

- Hugging Face Transformers: Provides pre-trained models and easy-to-use APIs. Limited to models available in the library.

- Keras: High-level API, easy to use, integrates well with TensorFlow. Less control over lower-level operations.

Q7. How would you optimize the performance of a generative model for a specific task, like image generation or text generation?

- Hyperparameter Tuning: Adjust parameters like learning rate, batch size, and network architecture.

- Model Architecture: Tailor the architecture to the task (e.g., GANs for image generation, Transformers for text).

- Training Data: Use high-quality, relevant, and diverse data.

- Regularization and Data Augmentation: Apply techniques to prevent overfitting and enhance generalization.
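As an example of data augmentation for image tasks, here is a minimal NumPy sketch of two standard transforms (random horizontal flip and random crop from a zero-padded copy), assuming images stored as (height, width, channels) arrays. Whether an augmentation is appropriate depends on the data: flipping is harmless for natural photos but can be wrong for text or some medical images.

```python
import numpy as np


def augment(image, rng, pad=4):
    """Random horizontal flip, then a random crop from a zero-padded copy; keeps the (H, W, C) shape."""
    if rng.random() < 0.5:
        image = image[:, ::-1, :]  # flip left-right
    h, w, _ = image.shape
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)))  # zero padding on height and width only
    top = rng.integers(0, 2 * pad + 1)  # crop offsets in [0, 2 * pad]
    left = rng.integers(0, 2 * pad + 1)
    return padded[top:top + h, left:left + w, :]


rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
print(augment(image, rng).shape)  # (32, 32, 3)
```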

Q8. How do you ensure that the generative models you develop are used responsibly and do not perpetuate bias or misinformation?

- Bias Detection: Regularly test models for biases and implement debiasing techniques.

- Ethical Guidelines: Follow ethical guidelines and best practices for AI development.

- Transparency: Document model training processes, data sources, and potential limitations.

- Human Oversight: Implement human oversight mechanisms to monitor and address misuse or unintended consequences.

Q9. How do you keep up with the latest developments in the field of generative AI?

- Research Papers: Read recent papers from conferences like NeurIPS, ICML, and CVPR.

- Blogs and News: Follow industry blogs, news, and updates from AI organizations and research groups.

- Online Courses and Workshops: Participate in online courses, webinars, and workshops.

- Community Engagement: Engage with online AI communities, forums, and social media.

Q10. What are generative models, and how do they differ from discriminative models?

- Generative Models: Learn the distribution of the data and can generate new data samples. Examples include GANs and VAEs.

- Discriminative Models: Focus on classifying data or predicting outcomes based on input features. Examples include logistic regression and SVMs.

Q11. Can you explain how a Generative Adversarial Network works?

A GAN consists of two neural networks:

- Generator: Creates fake data samples from random noise.

- Discriminator: Evaluates whether the data samples are real or fake.

The generator and discriminator are trained adversarially: the generator tries to produce realistic data to fool the discriminator, while the discriminator tries to correctly identify real versus fake data. This competition improves both models over time.

Q12. What are some common challenges in training generative models and how do you address them?

- Mode Collapse: The generator produces only a limited variety of outputs, covering a few modes of the data. Address it with techniques such as feature matching and mini-batch discrimination.

- Training Stability: GANs, in particular, can be unstable. Techniques like Wasserstein loss and gradient penalty can help.

- Data Quality: Poor quality data affects the model’s performance. Improve data quality through cleaning and augmentation.
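For reference, the Wasserstein loss with gradient penalty (WGAN-GP, Gulrajani et al., 2017) trains the critic D to minimize the first expression below, where P_r is the data distribution, P_g is the generator's distribution, and x̂ is a random interpolation between a real sample x and a generated sample x̃. The generator minimizes the second expression. The paper uses λ = 10.

```latex
L_D = \mathbb{E}_{\tilde{x} \sim P_g}\big[D(\tilde{x})\big]
    - \mathbb{E}_{x \sim P_r}\big[D(x)\big]
    + \lambda \, \mathbb{E}_{\hat{x}}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big],
\qquad \hat{x} = \epsilon x + (1 - \epsilon)\,\tilde{x},\quad \epsilon \sim U[0, 1]

L_G = -\,\mathbb{E}_{z}\big[D(G(z))\big]
```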

Q13. Describe a recent project where you implemented a generative model. What were the goals, and what were the outcomes?

Provide specific details about the project, including:

- Goals: What you aimed to achieve (e.g., generating realistic images, improving text generation).

- Implementation: The models and techniques used.

- Outcomes: The results and any improvements observed.

Q14. You are given a dataset with missing values and noisy data. How would you prepare this data for training a generative model?

- Imputation: Fill missing values using techniques like mean imputation, interpolation, or more sophisticated methods.

- Noise Reduction: Apply filtering techniques to clean noisy data.

- Data Augmentation: Generate additional data to compensate for missing or noisy samples.

- Feature Engineering: Transform features to better suit the generative model.
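A minimal NumPy sketch of the first two steps: column-mean imputation for missing values and a moving-average filter for noisy 1-D signals (for example, sensor readings). Both are deliberately simple baselines. Mean imputation shrinks a feature's variance, which matters for generative models that are meant to reproduce the data distribution, so model-based imputation is often preferable.

```python
import numpy as np


def mean_impute(X):
    """Replace each NaN with the mean of the observed values in its column."""
    X = np.asarray(X, dtype=float).copy()
    col_means = np.nanmean(X, axis=0)
    rows, cols = np.where(np.isnan(X))
    X[rows, cols] = col_means[cols]
    return X


def moving_average(signal, window=3):
    """Smooth a noisy 1-D signal with a centered moving average; edge padding preserves the length."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    padded = np.pad(np.asarray(signal, dtype=float), half, mode="edge")
    return np.convolve(padded, np.ones(window) / window, mode="valid")


X = np.array([[1.0, np.nan], [3.0, 4.0], [np.nan, 8.0]])
print(mean_impute(X))                   # NaNs become the column means 2.0 and 6.0
print(moving_average([0, 0, 3, 0, 0]))  # the spike is spread over its neighbors
```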

Q15. Imagine you need to create a generative model for text. What considerations would you take into account, and which models might you use?

- Considerations: Tokenization, handling of long-range dependencies, and context understanding.

- Models: Transformer-based models (like GPT-3) or recurrent neural networks such as LSTMs.
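Tokenization is one of the listed considerations. Most modern text generators use subword tokenizers, and byte-pair encoding (BPE) is a common choice. This sketch shows the core BPE training loop on a toy word list: repeatedly count adjacent symbol pairs and merge the most frequent one. It omits details that production tokenizers add (byte-level fallback, end-of-word markers, pre-tokenization rules), and ties between equally frequent pairs are broken by first occurrence.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(vocab, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def train_bpe(words, num_merges):
    """Learn up to num_merges merge rules, starting from single characters."""
    vocab = dict(Counter(tuple(w) for w in words))
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        merges.append(best)
        vocab = merge_pair(vocab, best)
    return merges, vocab


merges, vocab = train_bpe(["low", "low", "lower", "lowest"], num_merges=2)
print(merges)
print(vocab)
```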

Q16. Describe a time when you had to troubleshoot a complex issue in a machine learning model. What was the problem, and how did you resolve it?

Provide details on:

- Problem: Describe the specific issue.

- Investigation: How you diagnosed the problem.

- Resolution: The steps taken to fix the issue and the outcome.

Q17. How do you stay updated with the latest developments in generative AI?

- Research Publications: Regularly review recent papers and publications.

- Conferences and Workshops: Attend relevant events and conferences.

- Online Communities: Engage with online forums and social media related to AI.

- Professional Networks: Connect with experts and practitioners in the field.

Q18. How would you monitor the performance of your deployed model and handle issues such as model drift over time?

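- Monitoring Tools: Use tools and dashboards to track model performance metrics in real time.

- Model Drift Detection: Compare the distribution of incoming data and model outputs against a reference, such as the training data, using statistical tests or drift scores, and alert on significant changes.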

- Regular Retraining: Schedule periodic retraining or fine-tuning of the model with updated data.
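One common, lightweight drift check is the Population Stability Index (PSI), which compares the binned distribution of a feature (or of model scores) in production against a reference window such as the training data. A minimal NumPy sketch; the bin count and any alert threshold are assumptions to tune for your data (a commonly quoted rule of thumb treats values above roughly 0.25 as a significant shift).

```python
import numpy as np


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a current sample (e.g. production).

    Bins are quantiles of the reference data; 0 means the binned distributions are identical.
    """
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    edges = np.unique(np.quantile(reference, np.linspace(0.0, 1.0, bins + 1)))
    current = np.clip(current, edges[0], edges[-1])  # put out-of-range values in the edge bins
    ref_frac = np.histogram(reference, bins=edges)[0] / len(reference)
    cur_frac = np.histogram(current, bins=edges)[0] / len(current)
    ref_frac = np.clip(ref_frac, eps, None)  # avoid log(0) for empty bins
    cur_frac = np.clip(cur_frac, eps, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))


rng = np.random.default_rng(0)
train_feature = rng.normal(0.0, 1.0, 5000)
print(population_stability_index(train_feature, rng.normal(0.0, 1.0, 5000)))  # near 0: no drift
print(population_stability_index(train_feature, rng.normal(1.0, 1.0, 5000)))  # much larger: mean shifted
```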

Q19. What steps would you take to ensure transparency and fairness in the decision-making process of your AI system?

- Documenting: Maintain thorough documentation of model development, data sources, and decision-making processes.

- Bias Audits: Regularly audit the model for biases and fairness.

- Stakeholder Engagement: Involve diverse stakeholders in the development and evaluation process.

- Explainability: Use explainable AI techniques to make model decisions understandable to users.
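For the explainability point, permutation feature importance is a model-agnostic technique: shuffle one input feature at a time and measure how much a chosen metric degrades. A minimal NumPy sketch with a toy model (all names are illustrative); scikit-learn provides a fuller implementation as `sklearn.inspection.permutation_importance`.

```python
import numpy as np


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Average drop in `metric` when each feature column is shuffled (bigger drop = more important)."""
    rng = np.random.default_rng(seed)
    baseline = metric(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])  # break the link between feature j and y
            drops.append(baseline - metric(y, predict(X_perm)))
        importances[j] = np.mean(drops)
    return importances


def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))


def model_predict(data):
    """Toy model that only looks at feature 0, so feature 1 should get zero importance."""
    return (data[:, 0] > 0).astype(int)


rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2))
y = (X[:, 0] > 0).astype(int)
print(permutation_importance(model_predict, X, y, accuracy))
```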

Scenario-Based Questions

- Scenario: You’ve developed a machine learning model in Python that needs to be deployed in a production environment. What factors would you consider when choosing between different deployment options (e.g., containerization vs serverless)?

- Q: How would you monitor the performance of your deployed model and handle issues such as model drift over time?

- Scenario: You’re implementing a reinforcement learning algorithm in Python to train an agent to play a complex video game. How would you structure your code to balance between exploration and exploitation?

- Q: Discuss potential challenges and strategies for dealing with the curse of dimensionality in reinforcement learning problems.

- Scenario: You’re part of a team developing an AI-powered recommendation system. How would you identify and mitigate potential biases in the dataset and the model itself using Python?

- Q: What steps would you take to ensure transparency and fairness in the decision-making process of your AI system?

- Scenario: You’re developing a contextual AI model in Python that can understand and respond appropriately to user queries based on context from previous interactions. Explain the architecture and training process for such a model.

- Q: How would you handle scenarios where the context provided by previous interactions is ambiguous or incomplete, ensuring accurate contextual understanding?

- Scenario: You’re part of a team tasked with building a multilingual translation system using Python. How would you approach the integration of multiple languages and ensure high translation accuracy?

- Q: What challenges arise in translating idiomatic expressions and culturally specific language nuances, and how would you address them in your translation model?

- Scenario: You’re working on a project to analyze sentiment from social media posts using Python. Describe the pipeline and algorithms you would use to perform sentiment analysis effectively.

- Q: How would you address the challenge of sentiment ambiguity, where the sentiment expressed in a text could be subjective or context-dependent?

- Scenario: You’re developing a conditional text generation model in Python that can generate personalized product recommendations based on user preferences. Describe the architecture and training strategy for such a model.

- Q: Discuss methods for controlling the attributes or style of generated content (e.g., sentiment, tone) in conditional generation tasks, and potential applications beyond recommendation systems.

- Scenario: You’re tasked with developing a GAN model in Python to generate photorealistic images of human faces. How would you approach training the GAN architecture and handling challenges such as mode collapse and training instability?

- Q: Discuss techniques to evaluate the quality of generated images and strategies to improve the diversity and realism of generated outputs.
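For the reinforcement learning scenario, one common way to structure the exploration/exploitation trade-off is epsilon-greedy action selection with an epsilon that decays over training. A minimal plain-Python sketch, assuming a discrete action space and a list of estimated Q-values per action; the schedule parameters are illustrative defaults, not recommendations.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """Explore (random action) with probability epsilon; otherwise exploit the highest Q-value."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def linear_decay(step, start=1.0, end=0.05, decay_steps=10_000):
    """Anneal epsilon linearly from `start` to `end` over `decay_steps`, then hold it at `end`."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)


rng = random.Random(0)
q_values = [0.1, 0.9, 0.3]
for step in (0, 5_000, 20_000):
    eps = linear_decay(step)
    print(step, round(eps, 3), epsilon_greedy(q_values, eps, rng))
```

Keeping action selection in its own function makes it easy to swap in alternatives such as softmax (Boltzmann) exploration without touching the training loop.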

Coding Questions

- Q: Develop a Python script to train a convolutional neural network (CNN) for image segmentation. Your task is to accurately segment objects of interest in medical images or satellite photos using a popular deep learning framework.

```python
import matplotlib.pyplot as plt
import numpy as np
from tensorflow.keras.layers import Conv2D, Input, MaxPooling2D, UpSampling2D, concatenate
from tensorflow.keras.losses import BinaryCrossentropy
from tensorflow.keras.metrics import BinaryIoU
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam


def conv_block(x, filters):
    """Two 3x3 ReLU convolutions: the basic U-Net building block."""
    x = Conv2D(filters, (3, 3), activation="relu", padding="same")(x)
    return Conv2D(filters, (3, 3), activation="relu", padding="same")(x)


# Define the U-Net model for (binary) image segmentation
def unet_model(input_shape):
    inputs = Input(shape=input_shape)

    # Encoding path: each level doubles the filters and halves the resolution
    c1 = conv_block(inputs, 64)
    p1 = MaxPooling2D((2, 2))(c1)
    c2 = conv_block(p1, 128)
    p2 = MaxPooling2D((2, 2))(c2)
    c3 = conv_block(p2, 256)
    p3 = MaxPooling2D((2, 2))(c3)
    c4 = conv_block(p3, 512)
    p4 = MaxPooling2D((2, 2))(c4)

    # Bottleneck
    c5 = conv_block(p4, 1024)

    # Decoding path: upsample and concatenate the matching encoder features (skip connections)
    c6 = conv_block(concatenate([UpSampling2D((2, 2))(c5), c4]), 512)
    c7 = conv_block(concatenate([UpSampling2D((2, 2))(c6), c3]), 256)
    c8 = conv_block(concatenate([UpSampling2D((2, 2))(c7), c2]), 128)
    c9 = conv_block(concatenate([UpSampling2D((2, 2))(c8), c1]), 64)

    # 1x1 convolution to one sigmoid channel: per-pixel foreground probability
    outputs = Conv2D(1, (1, 1), activation="sigmoid")(c9)
    return Model(inputs=inputs, outputs=outputs)


# Load your dataset here
def load_data():
    # Placeholder function for loading data; replace with your actual data loading code
    x_train = np.random.rand(10, 256, 256, 3).astype("float32")  # example images
    y_train = np.random.randint(0, 2, (10, 256, 256, 1)).astype("float32")  # example binary masks
    return x_train, y_train


# Main function to train the model
def main():
    input_shape = (256, 256, 3)
    model = unet_model(input_shape)

    # The model outputs sigmoid probabilities for binary masks, so use binary cross-entropy
    # (not sparse categorical cross-entropy with logits). BinaryIoU thresholds predictions
    # at 0.5 and averages the IoU over background (0) and foreground (1).
    model.compile(
        optimizer=Adam(),
        loss=BinaryCrossentropy(),
        metrics=[BinaryIoU(target_class_ids=[0, 1], threshold=0.5)],
    )

    x_train, y_train = load_data()
    history = model.fit(x_train, y_train, epochs=10, batch_size=2, validation_split=0.1)

    # Plot training & validation loss values
    plt.plot(history.history["loss"])
    plt.plot(history.history["val_loss"])
    plt.title("Model loss")
    plt.xlabel("Epoch")
    plt.ylabel("Loss")
    plt.legend(["Train", "Validation"], loc="upper left")
    plt.show()

    # Save the model in the native Keras format
    model.save("unet_model.keras")


if __name__ == "__main__":
    main()
```

Q: Write an optimized Python function to compute the Levenshtein distance (edit distance) between two strings. Consider efficient algorithms and data structures to handle large inputs and minimize time complexity.

```python
def levenshtein_distance(s1: str, s2: str) -> int:
    """Edit distance (insertions, deletions, substitutions) in O(len(s1) * len(s2)) time
    and O(min(len(s1), len(s2))) memory, keeping only two rows of the DP matrix."""
    # Make s2 the shorter string so each row is as small as possible (distance is symmetric).
    if len(s1) < len(s2):
        s1, s2 = s2, s1

    # previous[j] = distance between the first i-1 characters of s1 and the first j of s2.
    previous = list(range(len(s2) + 1))
    for i, c1 in enumerate(s1, start=1):
        current = [i]  # distance from s1[:i] to the empty string
        for j, c2 in enumerate(s2, start=1):
            cost = 0 if c1 == c2 else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


# Example usage
s1 = "kitten"
s2 = "sitting"
print(f"The Levenshtein distance between '{s1}' and '{s2}' is {levenshtein_distance(s1, s2)}")
```

Conclusion

Preparing for a generative AI engineer interview requires a deep understanding of generative models, practical experience with frameworks, and awareness of ethical considerations. By studying these key questions and following the preparation tips, you can demonstrate your expertise and readiness for the role.

About Me

As businesses move towards cloud-based solutions, I provide my expertise to support them in their journey to the cloud. With over 15 years of experience in the industry, I am currently working as a Google Cloud Principal Architect. My specialization is in assisting customers to build highly scalable and efficient solutions on Google Cloud Platform. I am well-versed in infrastructure and zero-trust security, Google Cloud networking, and cloud infrastructure building using Terraform. I hold several certifications such as Google Cloud Certified, HashiCorp Certified, Microsoft Azure Certified, and Amazon AWS Certified.

Multi-Cloud Certified:

1. Google Cloud Certified — Cloud Digital Leader.

2. Google Cloud Certified — Associate Cloud Engineer.

3. Google Cloud Certified — Professional Cloud Architect.

4. Google Cloud Certified — Professional Data Engineer.

5. Google Cloud Certified — Professional Cloud Network Engineer.

6. Google Cloud Certified — Professional Cloud Developer Engineer.

7. Google Cloud Certified — Professional Cloud DevOps Engineer.

8. Google Cloud Certified — Professional Security Engineer.

9. Google Cloud Certified — Professional Database Engineer.

10. Google Cloud Certified — Professional Workspace Administrator.

11. Google Cloud Certified — Professional Machine Learning.

12. HashiCorp Certified — Terraform Associate

13. Microsoft Azure AZ-900 Certified

14. Amazon AWS-Practitioner Certified

I assist professionals and students in building their careers in the cloud. My aim is to provide easily understandable content related to Google Cloud, Google Workspace, AWS, and Azure. If you find the content helpful, please like, share, and subscribe for more amazing updates. If you require any guidance or assistance, feel free to connect with me.

YouTube: https://www.youtube.com/@growwithgooglecloud

Topmate: https://topmate.io/gcloud_biswanath_giri

Medium: https://bgiri-gcloud.medium.com/

Telegram: https://t.me/growwithgcp

Twitter: https://twitter.com/bgiri_gcloud

Instagram: https://www.instagram.com/multi_cloud_boy/

LinkedIn: https://www.linkedin.com/in/biswanathgiri/

GitHub: https://github.com/bgirigcloud

Facebook: https://www.facebook.com/biswanath.giri

Linktree: https://linktr.ee/gcloud_biswanath_giri

DM me :) I am happy to help!